How often do you use Google Analytics to figure out what’s working and what’s not in your marketing strategy?

If you genuinely invest in digital marketing, your answer is probably: quite regularly. So, let’s pretend you’re looking at traffic growth over the last six months. In the last two weeks, you’ve seen a dramatic increase in traffic figures. You do a happy dance, then sit down to see how much money those numbers are worth.

Except that you don’t find any. Even though traffic has more than doubled, your dollar figures have remained relatively unchanged. If you’re not careful, you’ll start modifying your landing pages, possibly cutting your rates, and breaking things that are still working well, when you should be looking for duplicate GA codes.

That’s how costly tracking errors in Google Analytics can be. But how do you correct a mistake you aren’t even aware of? That’s why we set out to cover the most common Google Analytics tracking errors made by marketers.

Source- https://databox.com/google-analytics-tracking-mistakes

This blog will help you minimise skewed data by correcting the following errors:

Not having backup and testing views

Even if you only have one account and property, you should always have at least three separate views:

- Master view. With all of the required settings and filters applied, this is the one you’ll use the most.

- Backup view. A view that has all of its settings left at their defaults. You’ll always have all the raw data here if anything goes wrong with your master view.

- Testing view. You can try changes here first to see how they behave. It’s helpful if you’re not sure what changing more sophisticated view settings, such as filters, will do.

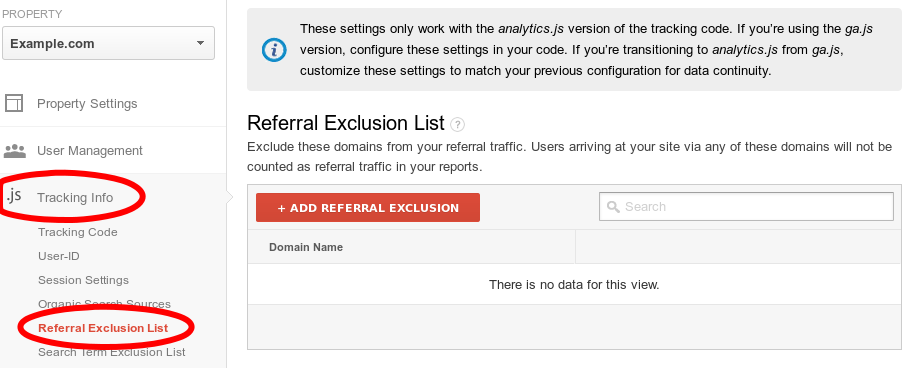

Not using a referral exclusion list

When you add a domain to a referral exclusion list, all traffic from that domain is no longer considered referral traffic and does not result in a new session being created.

This is particularly useful in three situations:

Payment gateways – If you use a third-party payment processor, your customers will most likely be redirected there and back when they complete a transaction. That should count as a single session from a single traffic source.

Subdomain tracking – Subdomains are distinct hostnames, so traffic between them would otherwise start a new referral session. Fortunately, GA adds your own domain to the list when you create the property. Leave it there. If you ever come across the setting in the tracking code or GTM, keep the default “Cookie Domain: Auto” as well.

Cross-domain tracking – If the same business runs microsites or other separate domains, you may want unified data for them.

Source- https://i.stack.imgur.com/4SQYm.png

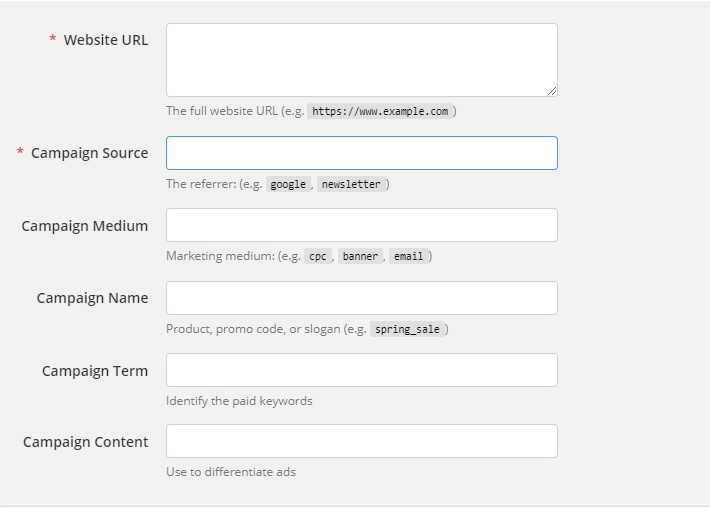

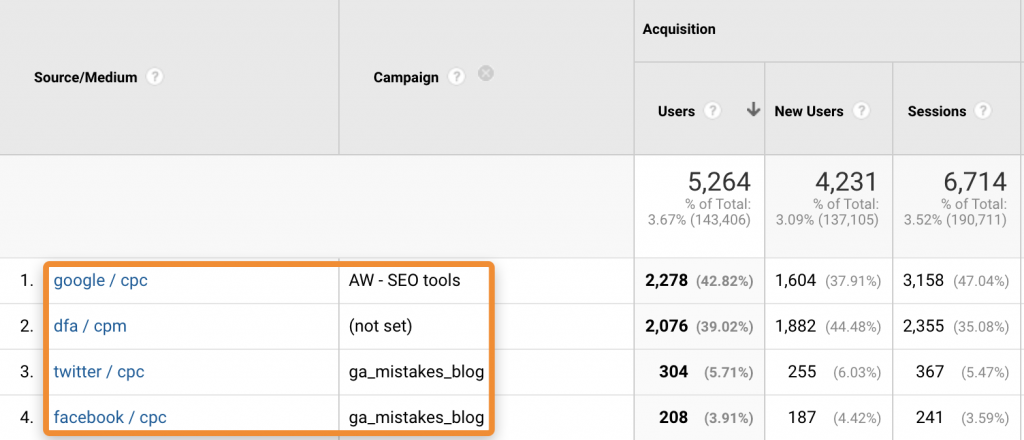
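To make the matching behaviour concrete, here is a minimal Python sketch of how a referral exclusion check can be modeled. It assumes that an excluded domain also covers its subdomains but not look-alike domains, which is how I understand GA’s documented behaviour. The function name `is_excluded_referrer` and the `.example` hostnames are hypothetical and not part of GA.

```python
def is_excluded_referrer(referrer_host, exclusion_list):
    """Return True if the referrer is an excluded domain or one of its subdomains."""
    host = referrer_host.strip().lower().rstrip(".")
    for domain in exclusion_list:
        domain = domain.strip().lower()
        # Exact match, or a subdomain such as checkout.gateway.example
        if host == domain or host.endswith("." + domain):
            return True
    return False


# Hypothetical exclusion list: your own domain plus a payment gateway
exclusions = ["mysite.example", "gateway.example"]

print(is_excluded_referrer("checkout.gateway.example", exclusions))  # True
print(is_excluded_referrer("not-mysite.example", exclusions))        # False
```

In this model, a visitor returning from the payment gateway keeps their original session, while a look-alike domain still shows up as a normal referral.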

Using UTM parameters incorrectly

UTM parameters are tags that are added to URLs to identify different types of traffic. They’re mainly used with paid advertisements and links that would otherwise be mixed in with organic traffic.

Let’s pretend we’re running Twitter advertisements. The traffic would be classified as “twitter.com / referral” by default, making performance analysis impossible. As a result, we attach UTM parameters to Twitter Ads URLs:

Source- https://unyscape.com/wp-content/uploads/2021/02/image6.jpg

These UTM parameters are then sent to Google Analytics servers and used in the dimensions that they belong to. This matters when you want to make informed adjustments to your digital content strategy.

Source- https://ahrefs.com/blog/wp-content/uploads/2020/03/11-utm-ga-1.png

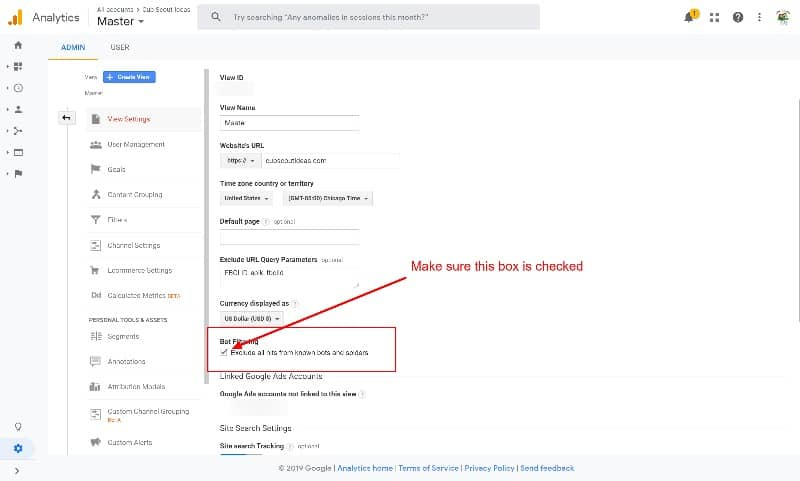
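If you tag many URLs, building them by hand invites typos. The following is a minimal Python sketch of a tagging helper, assuming the standard `utm_source`, `utm_medium`, and `utm_campaign` parameters. The helper name `add_utm` and the values used here (`twitter`, `cpc`, `spring_sale`) are illustrative and are not taken from the screenshots above.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode


def add_utm(url, source, medium, campaign, content=None, term=None):
    """Return url with UTM parameters appended, replacing any existing utm_* values."""
    parts = urlsplit(url)
    # Keep existing non-UTM parameters, drop stale utm_* ones
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith("utm_")
    ]
    # Lowercase values so "Twitter" and "twitter" do not become separate rows in reports
    query += [
        ("utm_source", source.lower()),
        ("utm_medium", medium.lower()),
        ("utm_campaign", campaign.lower()),
    ]
    if content:
        query.append(("utm_content", content.lower()))
    if term:
        query.append(("utm_term", term.lower()))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(add_utm("https://mysite.example/landing?ref=1", "twitter", "cpc", "spring_sale"))
# https://mysite.example/landing?ref=1&utm_source=twitter&utm_medium=cpc&utm_campaign=spring_sale
```

Lowercasing is a design choice here: GA treats campaign values as case-sensitive, so one consistent casing keeps a single campaign from being split across several rows.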

Not using bot filtering

Google can detect a significant part of the spam/bot traffic that your website receives. It’s as simple as checking a box. This can be found under Admin > View Settings:

It’s important to note that you only need to check this for your primary analytics view. This isn’t necessary for the raw or testing views.

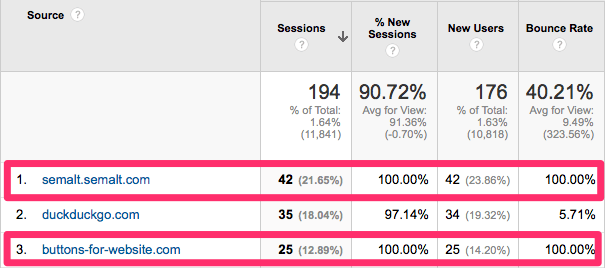

Popular websites attract spammy links. It’s simply the way things are.

Most of these are negligible and bring minimal referral traffic, while some can send thousands of spammy referrals every day.

Set the date range to three months minimum, then go to the Referrals report (Acquisition > All Traffic > Referrals) to see if this is a problem for you.

Look for dodgy domains that send a lot of referral traffic.

Don’t click through to suspicious domains, since they may contain malware or spyware. Instead, make a list and use a filter to exclude them (Admin > Filters). Set the Filter Field to “Campaign Source,” then list the domains separated by a pipe (|) character in the Filter Pattern field.

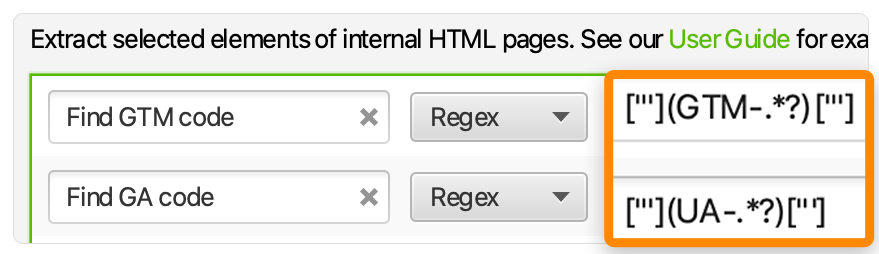
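Typing a long pipe-separated pattern by hand is error-prone, so it can help to generate it. Below is a minimal Python sketch that turns a list of domains into one or more filter patterns, escaping the dots so they match literally. The function name `build_filter_patterns` and the `.example` domains are hypothetical, and the 255-character cap is an assumption about the Filter Pattern field that you should verify for your own account.

```python
import re


def build_filter_patterns(domains, max_len=255):
    """Join domains into pipe-separated patterns, each at most max_len characters."""
    patterns, current = [], ""
    for domain in sorted(set(d.strip().lower() for d in domains if d.strip())):
        escaped = domain.replace(".", r"\.")  # match a literal dot, not "any character"
        candidate = escaped if not current else current + "|" + escaped
        if len(candidate) > max_len and current:
            # Start a new pattern; each pattern needs its own filter
            patterns.append(current)
            current = escaped
        else:
            current = candidate
    if current:
        patterns.append(current)
    return patterns


spam = ["spam-one.example", "Bad.example", "bad.example"]  # hypothetical list
patterns = build_filter_patterns(spam)
print(patterns[0])
print(bool(re.search(patterns[0], "bad.example")))   # True
print(bool(re.search(patterns[0], "badxexample")))   # False
```

Try the resulting pattern in your testing view first, so a mistake in the list does not remove legitimate traffic from the master view.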

Google Analytics code is missing or duplicated

This may seem insignificant, but it’s a regular issue, especially on sites that employ multiple CMSs. The good news is that Google Analytics contains built-in notifications for missing codes. The bad news is that it’s slow, and it could take weeks for you to receive notifications about pages that are lacking code. It also fails to alert you to duplicate codes, which is a typical occurrence.

As a result, it’s advisable not to rely on Google’s notifications and instead use a service that permits custom extraction to crawl your site for issues. Here’s how to use Screaming Frog to create a crawl with custom extraction to scrape both Google Tag Manager and Google Analytics codes:

Source- https://ahrefs.com/blog/wp-content/uploads/2020/03/2-sf-regex-1.png

To inspect the data, it’s best to export the crawl. You can then quickly filter out pages with missing codes, or spot duplicates when the report contains extra columns such as Find GTM code 1 and Find GTM code 2.

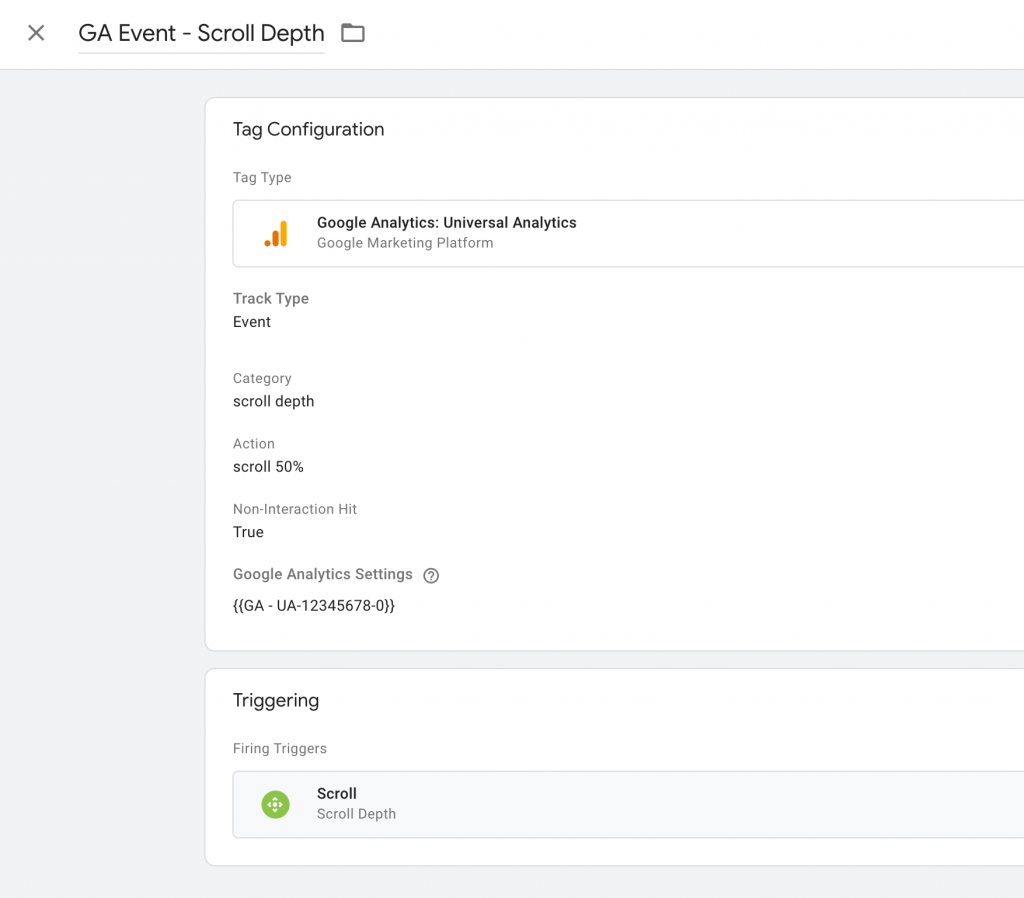
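The same idea can be sketched in a few lines of Python if you already have the HTML of your pages. This is not the Screaming Frog configuration shown above, only an illustration of the check. It assumes Universal Analytics (`UA-…`) and Google Tag Manager (`GTM-…`) IDs, and it counts only IDs wrapped in quotes, on the assumption that a standard snippet contains the quoted ID once. The name `audit_page` and the sample ID are hypothetical.

```python
import re
from collections import Counter

# Quoted GTM or UA IDs, e.g. 'GTM-ABC123' or "UA-12345-1"
TRACKING_ID = re.compile(r"""["'](GTM-[A-Z0-9]+|UA-\d+-\d+)["']""")


def audit_page(html):
    """Report whether tracking IDs are missing or appear more than once in the HTML."""
    counts = Counter(TRACKING_ID.findall(html))
    return {
        "missing": not counts,
        "duplicates": sorted(tid for tid, n in counts.items() if n > 1),
        "counts": dict(counts),
    }


snippet = "<script>ga('create', 'UA-12345-1', 'auto');</script>"  # sample ID

print(audit_page(snippet)["duplicates"])          # []
print(audit_page(snippet * 2)["duplicates"])      # ['UA-12345-1']
print(audit_page("<p>no tags</p>")["missing"])    # True
```

Treat any hit as a lead to check manually rather than as proof. A page that carries both a GTM container and a hard-coded UA snippet is also worth a look, since the same property may then be fired twice.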

Interaction events that have been set up incorrectly

It makes sense to set up interaction events for things like purchases, form submissions, and video plays. They’re crucial to your business, so it’s fine that these sessions are not counted as bounces, even if the visitor only views one page.

However, if you use interaction events to track events that happen automatically on each page, such as scroll depth tracking, you’ll get near-zero bounce rates throughout your entire website, which isn’t good.

You can spot this issue by looking for abnormally low bounce rates in GA. If you think interaction events are to blame, change the event’s “Non-interaction hit” setting in Google Tag Manager from false to true.

Source- https://www.getelevar.com/wp-content/uploads/2019/11/gtmscrolldepth.png

Add one extra line of code to the GA event snippet if you’re not using Google Tag Manager.

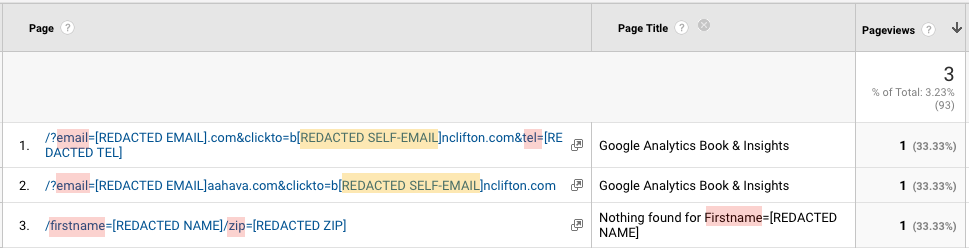
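To see why this one setting moves the bounce rate so much, here is a simplified Python model of the calculation. It is a sketch and not GA’s actual implementation: it assumes a session is a bounce when it contains at most one interaction hit, and the hit dictionaries and function names are hypothetical.

```python
def is_bounce(hits):
    """A session counts as a bounce here if it has at most one interaction hit."""
    interaction_hits = [h for h in hits if not h.get("non_interaction", False)]
    return len(interaction_hits) <= 1


def bounce_rate(sessions):
    """Share of sessions that are bounces, between 0.0 and 1.0."""
    return sum(is_bounce(s) for s in sessions) / len(sessions)


def one_page_session(scroll_is_non_interaction):
    """One pageview plus an automatic scroll-depth event."""
    return [
        {"type": "pageview"},
        {"type": "event", "action": "scroll",
         "non_interaction": scroll_is_non_interaction},
    ]


# Scroll event sent as an interaction hit: no session is a bounce
print(bounce_rate([one_page_session(False)] * 4))  # 0.0
# Scroll event flagged as non-interaction: single-page visits bounce again
print(bounce_rate([one_page_session(True)] * 4))   # 1.0
```

The visits are identical in both runs; only the flag on the scroll event changes, which is why a misconfigured event can push bounce rates towards zero across the whole site.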

Tracking Personally Identifiable Information (PII)

While this has no effect on data clarity, it can be disastrous for your business. You must ensure that you are not tracking any personally identifiable information (PII) such as emails, phone numbers, or names. Better yet, follow the data protection and privacy laws that apply to your company.

Unfortunately, you may be tracking PII without even realising it, for example when forms or other features put personal information into URL parameters.

Source –https://brianclifton.com/wp-content/uploads/2017/09/PII-redacted.png

If you’re using a major CMS, this is unlikely to be an issue, but if you have a completely custom website, you should double-check. To state the obvious, custom dimensions should not be used to collect PII. Also, if you want to see what information websites collect, you can use a browser plugin.
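One way to double-check is to export the page URLs from your reports and scan their query strings. The following Python sketch flags values that look like email addresses and parameter names that suggest personal data. The function name `find_pii`, the list of suspicious keys, and the sample URLs are assumptions for illustration, and a simple pattern like this will miss cases, so treat it as a first pass only.

```python
import re
from urllib.parse import urlsplit, parse_qsl

# Deliberately loose pattern: something@something.tld
EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
# Hypothetical list of parameter names worth a manual look
SUSPECT_KEYS = {"email", "e-mail", "name", "firstname", "lastname", "phone", "tel"}


def find_pii(url):
    """Return (parameter, reason) pairs for query parameters that may hold PII."""
    findings = []
    # parse_qsl decodes percent-encoding, so jane%40example.com becomes jane@example.com
    for key, value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
        if EMAIL.search(value):
            findings.append((key, "email-like value"))
        elif key.lower() in SUSPECT_KEYS and value:
            findings.append((key, "suspicious parameter name"))
    return findings


print(find_pii("/thanks?email=jane%40example.com&plan=pro"))
# [('email', 'email-like value')]
print(find_pii("/pricing?utm_source=twitter"))
# []
```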

Conclusion

Test, verify, repeat. You should act like a Quality Assurance engineer whenever you make changes to your GA settings, GTM, or tracking codes.

That means you’ll need to be familiar with source code, cookies, and a variety of debugging tools.

I suggest you use the following:

- Google Tag Assistant

- Google Analytics Debugger

- Dataslayer

- WASP

- Real-time reports in GA, to immediately see the effects of fired tags

- Debugging tools of any ad platforms that you use, e.g., Facebook Pixel Helper

The time it takes to implement, audit, and debug depends on the complexity of your tracking needs and code implementation. Unless your tracking needs are minimal, I strongly suggest you switch to GTM if you haven’t already.

Yes, if you’re a newbie, learning it will take a while. However, the advantages are considerable. You won’t need to ask developers to make tracking code changes, and having properly organised containers, tags, triggers, and variables is fantastic.

This article is contributed by Himani, Founder of Missive Digital, an organic marketing agency that focuses on enhancing the brand positioning of businesses to maximize ROI and brand loyalty through organic marketing channels. She specialises in strategizing, creating, and optimising content for users and SERP features like Featured Snippets. With 12+ years in this industry, she has helped SaaS and technology businesses multiply their organic presence and conversions through organic marketing channels.